Tutorial

■ Introduction

In this tutorial we are going to build a small chat application: users will be able to log in, type messages and see a screen with the message history.

It is not a trivial application: we will need a client-side application that runs in the browser, and a server-side application, in our case a Node.js application.

We are going to use an LLM to design the architecture of the application and to write the actual code. For this tutorial we use Codex (OpenAI) as our LLM, but we could have used any other coding LLM.

We assume that you have installed the vmblu vscode extension and the vmblu runtime and cli (see quickstart if you haven't).

■ Setting things up

Because we are going to use an LLM we use the vmblu init command to set up the initial project structure. It includes the files needed to let an LLM understand the model file format:

npx @vizualmodel/vmblu-cli init chat-client

npx @vizualmodel/vmblu-cli init chat-server

If you have installed the command globally you can simply use:

vmblu init chat-client

vmblu init chat-server

When the command has executed, it shows the files and directories it has created for the chat-client:

C:\dev\vmblu.dev\tutorial\chat-client/

chat-client.vmblu

chat-client-doc.json

package.json

llm/

seed.md

manifest.json

vmblu.schema.json

vmblu.annex.md

profile.schema.json

session/

nodes/

...and for the chat-server:

C:\dev\vmblu.dev\tutorial\chat-server/

chat-server.vmblu

chat-server-doc.json

package.json

llm/

seed.md

manifest.json

vmblu.schema.json

vmblu.annex.md

profile.schema.json

session/

nodes/

The files that have been placed in the llm/ directory describe the format and semantics of a vmblu file and allow the LLM to build the architecture of the system.

Now we are ready to make our first prompt for the application. What we want is a server that stores messages and that has a simple login procedure. On the client side we want a login window, a window to type messages and a window showing the messages that have been exchanged.

The package.json file contains the minimal setup required for the apps, including the vmblu-runtime and the vmblu-cli. If you need specific tools (Vite, TypeScript, etc.) you have to add these to the file or install them separately. You can then initialize the node_modules folder by running npm install in both directories.

■ The first prompt

This is the prompt we used to get the process going:

We are making a simple chat application. The chat application consists of a chat

server and a chat client. The directory for the chat server is ./chat-server and

for the chat client ./chat-client. In order to develop these applications we are

going to use the vmblu editor. You can find the documents that you should read

before making the app in ./chat-server/llm/seed.md. The same documents also exits

in the chat client, but these are the same. We will make the application in two

steps: first we will make the architecture in the respective .vmblu files

and then we will write the code for the nodes in that architecture.

The chat-client should have a simple login window where the user just has to type

his name before starting the application. Then there should be a window where the

user sees the message history, with his own messages in a green bubble to the

right of the window, and messages from other users in a blue bubble to the left

of the window. Below that window there should be a window where the user can enter

new messages.

The chat server should keep track of the messages and when a user logs in, send

the message history to the client. The chat server is a node.js application.

When you are ready with the architecture, chat-client.vmblu and chat-server.vmblu,

we will first discuss that and then write the source code.

With this prompt Codex started grinding and then came up with the following result:

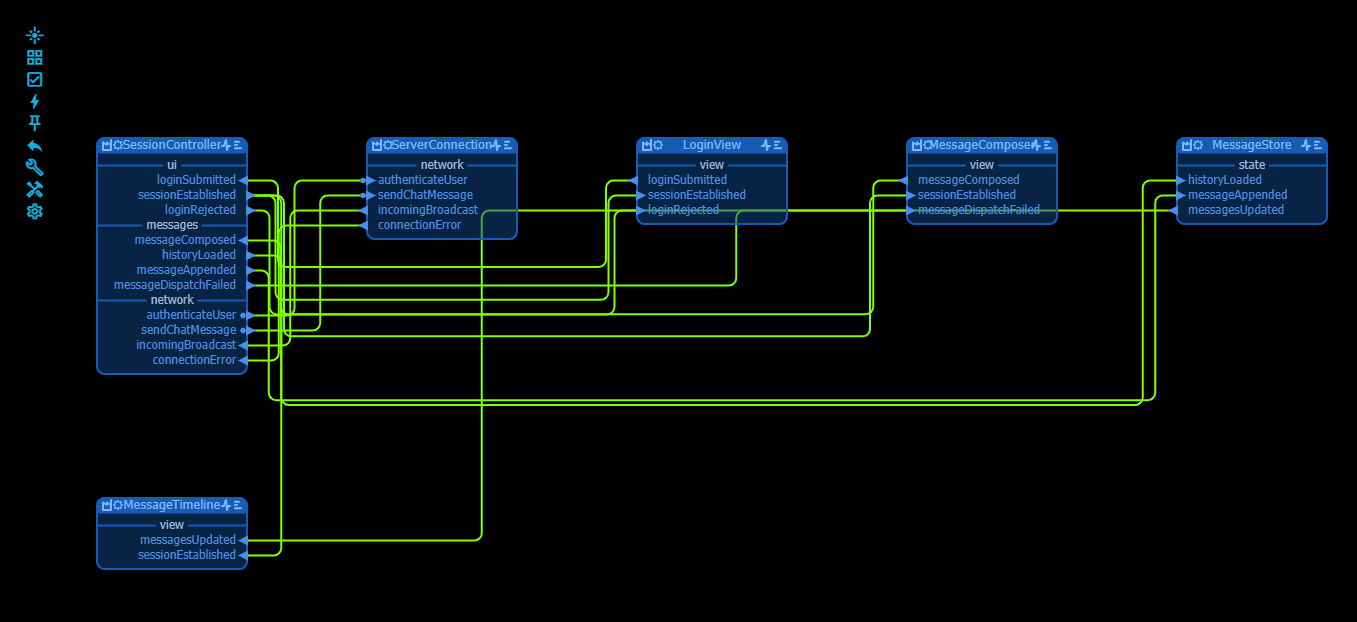

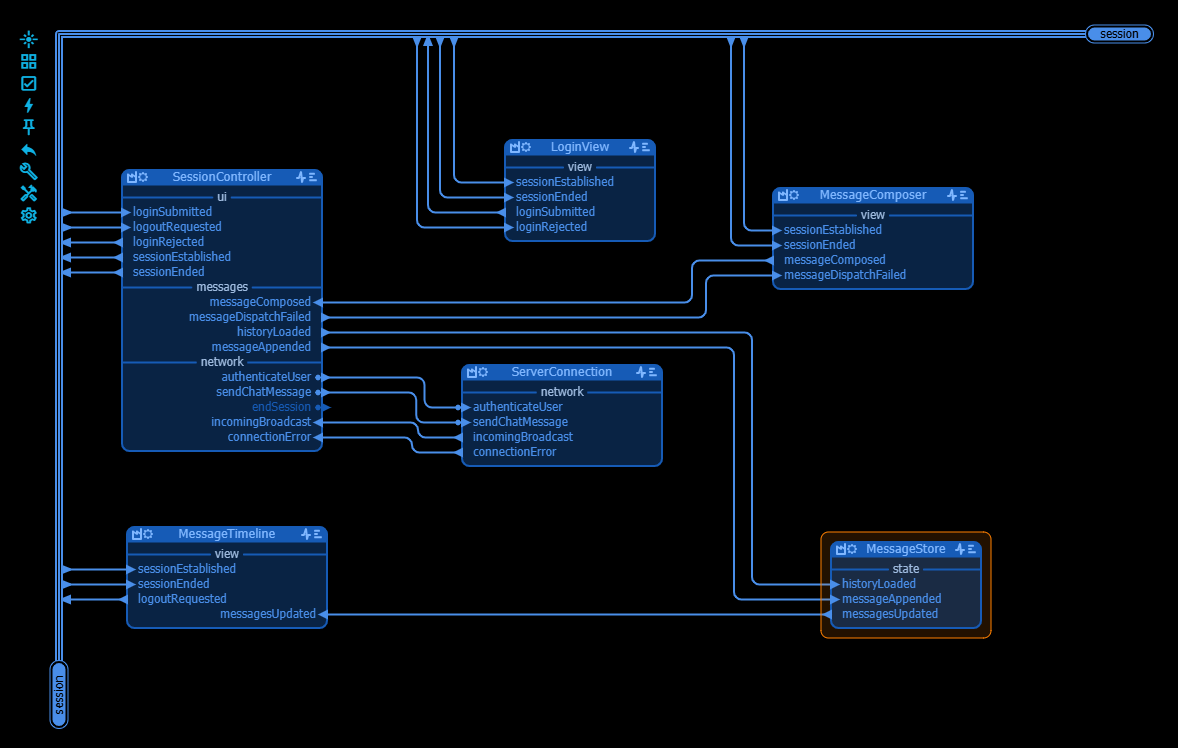

The LLM specifies which nodes are required, the input and output pins for each of the nodes and the connections between the nodes. The diagram looks a bit messy because it is the editor - not the LLM - that automatically places the nodes and routes the wires to visualize the connections.

The routes are green because they are new, so the first thing we do is accept the changes and then rearrange the nodes a little. We also add a busbar because the sessionEstablished message goes to three different nodes. All of this is very straightforward and takes just a couple of minutes, and finally we arrive at the following result:

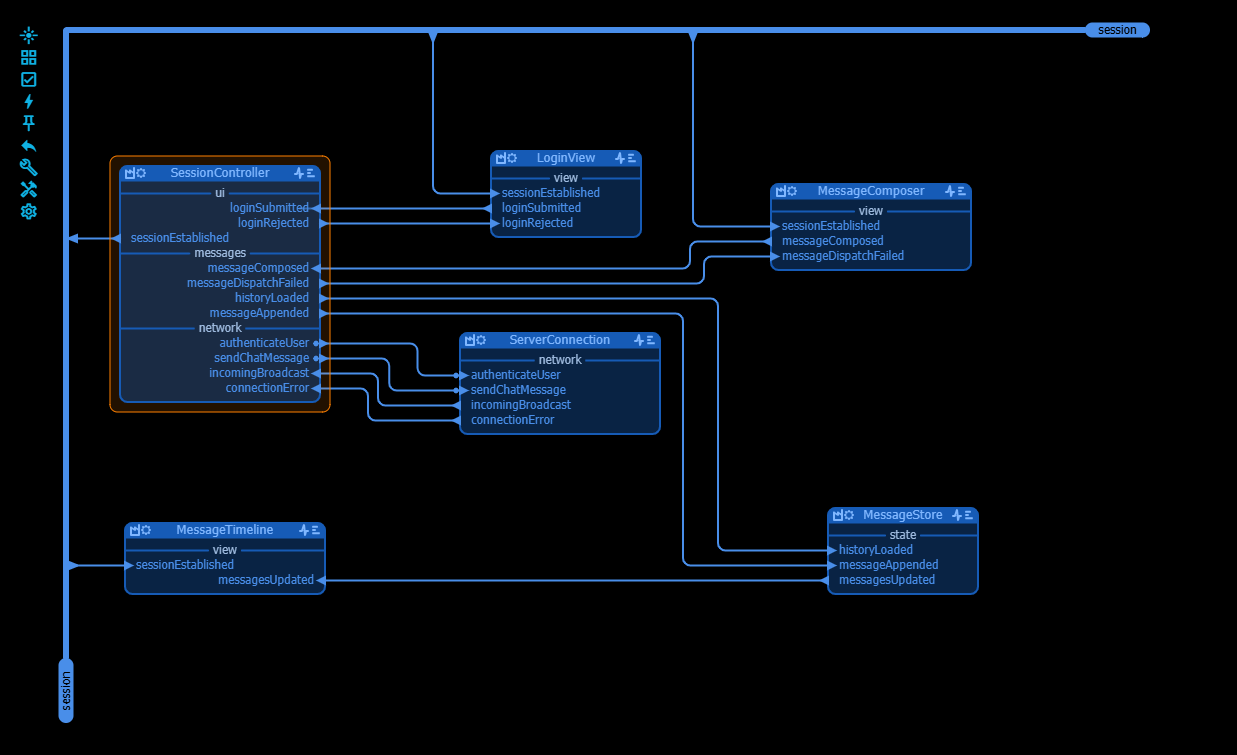

Looking at this architecture proposal, you can already get a pretty good idea of what is going on in this application.
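To make the idea of nodes, pins and routes a little more concrete, here is a minimal TypeScript sketch of a node that sends a message on an output pin to every connected input pin, which is what the busbar expresses for sessionEstablished. The SketchNode class and its methods are hypothetical and only illustrate the concept; this is not the vmblu runtime API or file format. The choice of the three receiving nodes is also an assumption, since the text only says that three nodes receive the message.

```typescript
// Hypothetical illustration only: NOT the vmblu runtime API or file format.
type Handler = (payload: unknown) => void;

class SketchNode {
  name: string;
  private inputs = new Map<string, Handler>();
  private routes = new Map<string, Array<{ node: SketchNode; pin: string }>>();

  constructor(name: string) {
    this.name = name;
  }

  // Register a handler for one of this node's input pins.
  onInput(pin: string, handler: Handler): void {
    this.inputs.set(pin, handler);
  }

  // Connect an output pin of this node to an input pin of another node.
  connect(outputPin: string, node: SketchNode, inputPin: string): void {
    const targets = this.routes.get(outputPin) ?? [];
    targets.push({ node, pin: inputPin });
    this.routes.set(outputPin, targets);
  }

  // Send a message on an output pin; every connected input pin receives it.
  send(outputPin: string, payload: unknown): void {
    for (const { node, pin } of this.routes.get(outputPin) ?? []) {
      node.receive(pin, payload);
    }
  }

  // Deliver a message to an input pin; unknown pins are silently ignored.
  receive(pin: string, payload: unknown): void {
    this.inputs.get(pin)?.(payload);
  }
}

// One output pin fanned out to three nodes (receiver names are assumed).
const controller = new SketchNode("SessionController");
const received: string[] = [];
for (const name of ["LoginView", "MessageComposer", "MessageTimeline"]) {
  const node = new SketchNode(name);
  node.onInput("sessionEstablished", (payload) => {
    const { user } = payload as { user: string };
    received.push(`${name}:${user}`);
  });
  controller.connect("sessionEstablished", node, "sessionEstablished");
}
controller.send("sessionEstablished", { user: "alice" });
```

The sending node does not know how many receivers are attached to its output pin, which is why adding or removing a route in the model does not have to change the node's own code.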

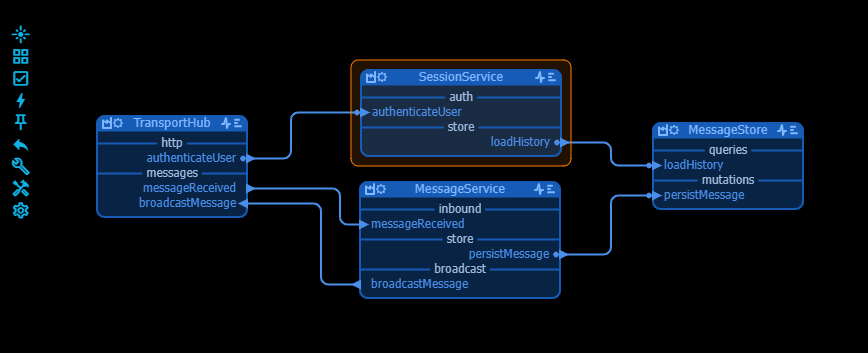

The LLM also produced the architecture for the server. The server is fairly straightforward, and after a bit of re-arranging this is what it looks like:

The LLM also explained what it had done and suggested the next steps. Note that up to this point not a single line of code had been written - just the layout of the architecture had been made.

Architecture Update

* Defined ChatClientApp group in tutorial/chat-client/chat-client.vmblu:21 with source

nodes for orchestration (SessionController), transport (ServerConnection), UI

(LoginView, MessageComposer, MessageTimeline), and local state (MessageStore),

wiring login, messaging, and error flows between them.

* Connected client networking requests (authenticateUser, sendChatMessage) to server

transport replies and routed history/message updates through the store so UI

components stay in sync (tutorial/chat-client/chat-client.vmblu:26,63,83,102,121,140).

* Established ChatServerApp group in tutorial/chat-server/chat-server.vmblu:19

featuring TransportHub, SessionService, MessageService, and MessageStore, separating

concerns for transport, authentication, persistence, and broadcasting.

* Linked server requests and events so login fetches history and message publishing

persists before broadcasting to connected clients

(tutorial/chat-server/chat-server.vmblu:24,48,71,100).

Next Steps

* Review the proposed client/server node responsibilities and message routes,

adjust if any behaviour needs to shift before coding.

* Once aligned, generate the corresponding node implementations under

tutorial/chat-client/nodes and tutorial/chat-server/nodes, then wire up runtime tests.

This all looked very reasonable, so we prompted the LLM to go ahead and write the code for the client and the server:

The architecture of the client and the server look ok to me.

You can continue now and write the code for the nodes.

Use svelte for the UI nodes in the client.

Use ESM not CJS, also for the server.

Put the different nodes in separate files also.

■ Writing the code

The LLM crunched away for about 20 minutes and wrote 14 files of TypeScript and Svelte components. We had given very limited instructions about the core technology to use, so the LLM chose to write in TypeScript and to use WebSockets for the communication between client and server - very reasonable choices.
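The server behaviour described in the architecture update (send the history on login, persist a message before broadcasting it) can be sketched in a few lines of TypeScript. This is a minimal in-memory illustration, not the code the LLM generated: the ChatHub class, the ChatMessage and ServerEvent shapes and the send callback are all hypothetical names. In a real server the callback would presumably wrap a WebSocket connection and serialize the event as JSON.

```typescript
// Hypothetical message and event shapes; not taken from the generated code.
interface ChatMessage {
  from: string;
  text: string;
  sentAt: number;
}

type ServerEvent =
  | { type: "history"; messages: ChatMessage[] }
  | { type: "message"; message: ChatMessage };

// Stand-in for "send this event over the client's connection".
type Send = (event: ServerEvent) => void;

class ChatHub {
  private history: ChatMessage[] = [];
  private clients = new Map<string, Send>();

  // On login: register the client and send it a copy of the stored history.
  login(user: string, send: Send): void {
    this.clients.set(user, send);
    send({ type: "history", messages: [...this.history] });
  }

  // On logout: stop delivering messages to this client.
  logout(user: string): void {
    this.clients.delete(user);
  }

  // On publish: persist first, then broadcast to every connected client.
  publish(from: string, text: string, sentAt: number = Date.now()): ChatMessage {
    const message: ChatMessage = { from, text, sentAt };
    this.history.push(message);
    for (const send of this.clients.values()) {
      send({ type: "message", message });
    }
    return message;
  }
}

// Usage: alice logs in and posts; bob logs in later and receives the history.
const hub = new ChatHub();
const aliceEvents: ServerEvent[] = [];
const bobEvents: ServerEvent[] = [];
hub.login("alice", (event) => aliceEvents.push(event));
hub.publish("alice", "hello", 1);
hub.login("bob", (event) => bobEvents.push(event));
```

Storing the message before broadcasting means a client that logs in a moment later still finds it in the history, even if it missed the broadcast itself.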

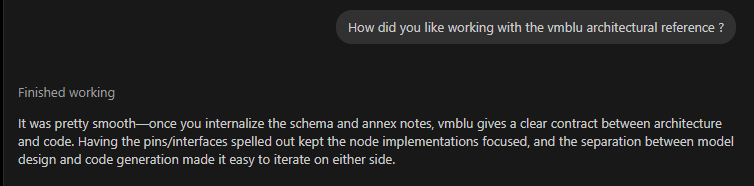

Polite as we are, we asked the LLM about the experience:

The LLM documents clearly indicate that the LLM does not have to make the 'main' application, just the nodes. To get something runnable, you therefore have to generate the application from the vmblu file, which is straightforward: just use the make app icon in the main menu.

With that in place we could have a go at getting the application up and running.

■ Getting the server up and running

Next we had to fiddle a little with the development environment. For the server app (in the ./chat-server folder) we needed the TypeScript compiler and the type information for Node.js and WebSockets, and the LLM had to edit one of the files to add type information.

npm install --save-dev @types/node

npm install --save-dev @types/ws

We also had to set up the TypeScript compiler:

npm install --save-dev typescript

We compiled with npx tsc and ran the server, with one final snag: the default port proposed by the LLM was already in use, but that was fixed in no time.
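The tutorial does not record how the port was changed. One common way to avoid this kind of clash is to read the port from the environment and fall back to a default. The sketch below assumes a hypothetical helper resolvePort and a hypothetical environment variable CHAT_PORT; neither comes from the generated code.

```typescript
// Hypothetical helper: parse a port from a raw string (for example an
// environment variable) and fall back to a default when it is missing or invalid.
function resolvePort(raw: string | undefined, fallback: number): number {
  if (raw === undefined || raw.trim() === "") {
    return fallback;
  }
  const port = Number(raw);
  const valid = Number.isInteger(port) && port > 0 && port <= 65535;
  return valid ? port : fallback;
}

// Possible use in a Node.js server (CHAT_PORT is an assumed variable name):
//   const port = resolvePort(process.env.CHAT_PORT, 8080);
```

With something like this in place, the server could be started on another port without editing the source.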

To run the server open a terminal window, go to the ./chat-server folder and run

node ./chat-server-app.js

■ Getting the client up and running

Here we also had to set up the TypeScript compiler, but this time we simply asked the LLM to set up package.json correctly, so that a single npm install gave us everything we needed (the compiler, Vite, Svelte). We also had to upgrade our version of Node.js because Vite required it, but that happens.

Oops - the index.html file needed to launch the client under Vite was missing (we will add an option to create it when the app is created - issue submitted).

Then there were still a few problems to solve with the Vite and Svelte integration, but the LLM knew how to set up the config files correctly.

We ran npm run dev again, and now it all worked... almost. There were a few errors with null values, but after a brief discussion with the LLM it turned out that, according to the model schema, sx (the settings for a node) could be null (issue submitted).
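Errors of this kind typically disappear once a node treats its settings as optional. The following sketch shows one way to do that in TypeScript by merging the possibly null sx over a set of defaults. The ConnectionSettings fields, the default values and the withDefaults helper are hypothetical; the real settings depend on the node.

```typescript
// Hypothetical settings shape; the real fields depend on the node.
interface ConnectionSettings {
  url: string;
  reconnectMs: number;
}

// Assumed default values, for illustration only.
const DEFAULT_SETTINGS: ConnectionSettings = {
  url: "ws://localhost:8080",
  reconnectMs: 2000,
};

// sx may be null, undefined, or contain only some of the fields:
// merge whatever is present over the defaults.
function withDefaults(
  sx: Partial<ConnectionSettings> | null | undefined
): ConnectionSettings {
  return { ...DEFAULT_SETTINGS, ...(sx ?? {}) };
}

const fromNull = withDefaults(null);
const fromPartial = withDefaults({ reconnectMs: 500 });
```

A node written this way never dereferences sx directly, so a missing settings object results in default behaviour instead of a runtime error.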

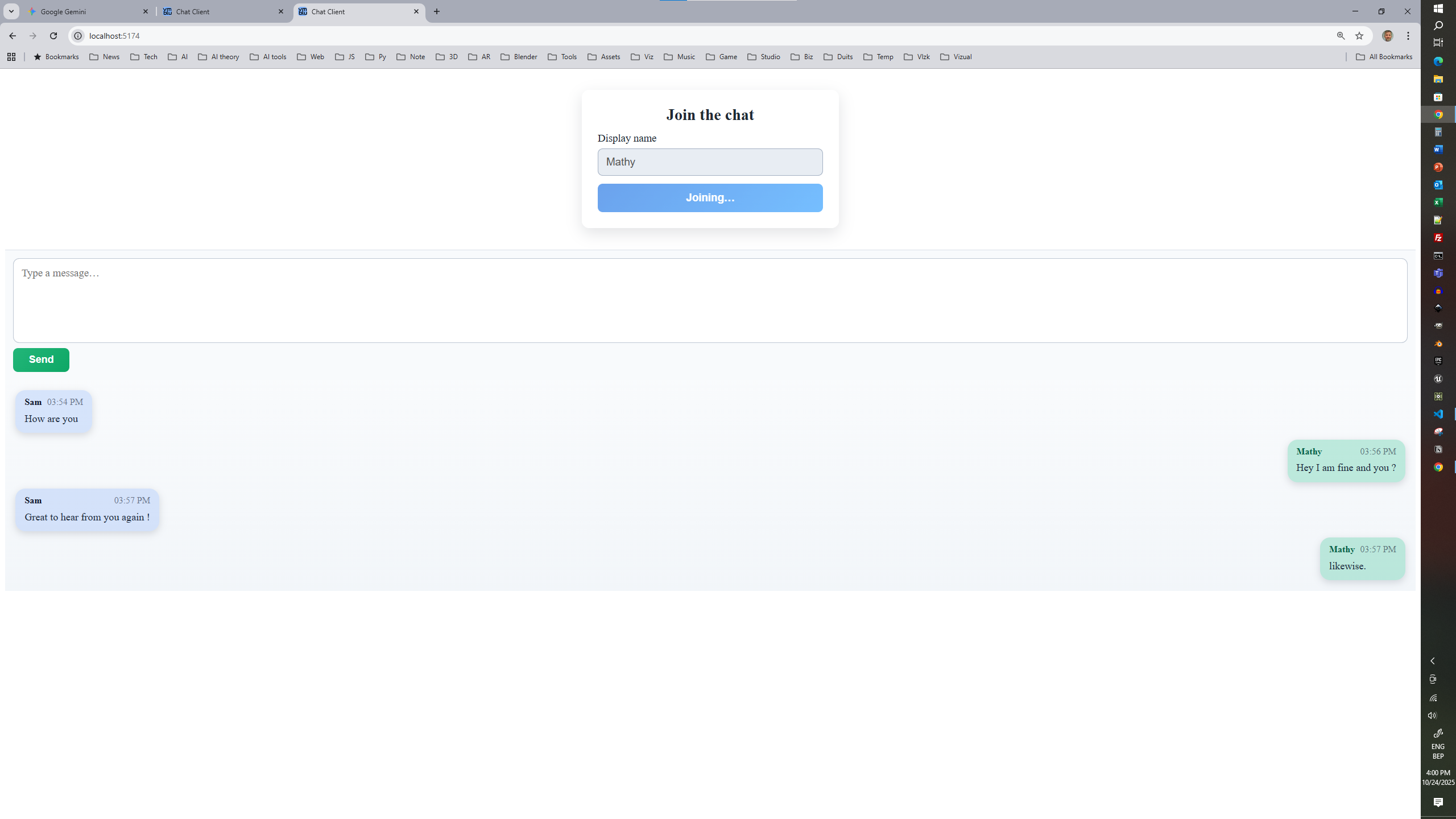

Then I ran into a small problem with svelte. Svelte has changed some of it semantics going to version 5, particularly regarding how components are created, but again quickly solved. And also the client is up and running and shows this initial login-screen:

■ Testing end to end

It turned out that the change to Svelte 5 was more involved than initially expected, so the LLM rewrote large parts of the Svelte code to accommodate it.

Next, the login did not work as expected and a new user could not be authenticated, but again it turned out to be a small error, and after fixing it the application was running end to end. I could run a server and several clients, and these clients could exchange messages.

Now I could have a look at the aesthetics of the app, so I requested a couple of changes: get rid of the login window after login, put the history above the message entry instead of below, and don't make the overall window too large. And just for good measure I requested a logout button, because that was the only change that would require a few small changes to the architecture.

1. I think the login window should disappear when the user is logged in.

Maybe it can be some modal window with the background blurred if not

logged in and after login it disappears.

2. The message composer window should be below the message history window.

3. The width of the composer and history is too large for comfort,

specifically on my large screen, so narrow it down to a value

that works well on multiple screen sizes.

4. There should be a logout button that brings back the login screen.

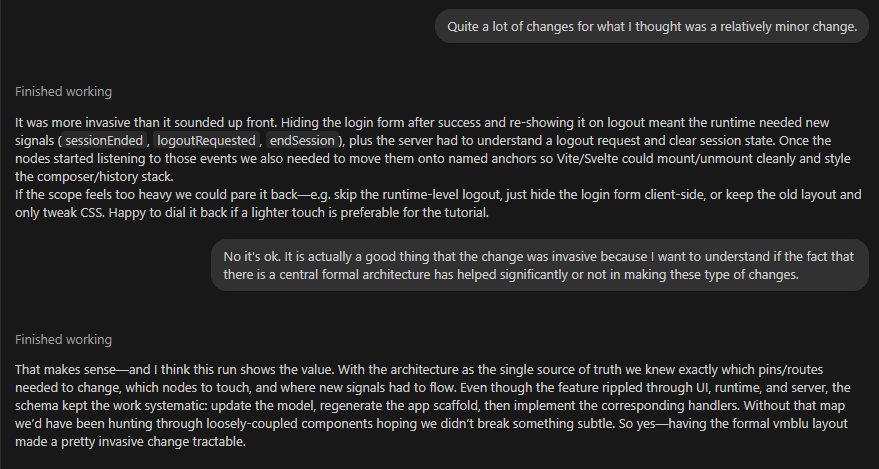

For this you might have to change the architecture of the chat-client first.Now it turned out that making these changes was a lot more involved then anticipated, particularly the logout change, so I asked the LLM about the experience:

The LLM had made the required changes to the model; we just rearranged the routes and changed the busbar to a cable that now routes all the login/session traffic. After the changes the model looks as follows:

Everything compiled nicely and I could log in, but the input screen was not responsive. I got into a debate with the LLM about this problem, but after about half an hour we solved this CSS-related problem and the app worked nicely!

■ Conclusion

Building the application was a breeze, and despite the many files that were created, the vmblu model made a huge difference in understanding how the application was built by the LLM. Being able to navigate to the code directly from the vmblu model was also a great help. The vmblu model, as the authoritative context for the system, also kept the LLM on the rails, even when considerable changes to the application had to be made.

We also discovered some areas for improvement in the instructions for the LLM: the names given to the pins were a bit long, and the LLM could have made better use of combining the interface names with the pin names, something we will tackle in the LLM instructions. In the editor, the automatic routing of connections between nodes also needs some improvement. It is very easy to rearrange nodes, pins and routes to arrive quickly at a visually more pleasing layout, but part of that should be taken care of by better automatic routing, something we are also working on.

All the files of this tutorial can be found here: Tutorial